Building Quench AI: Track drinks with a photo

Building Quench AI: Track drinks with a photo

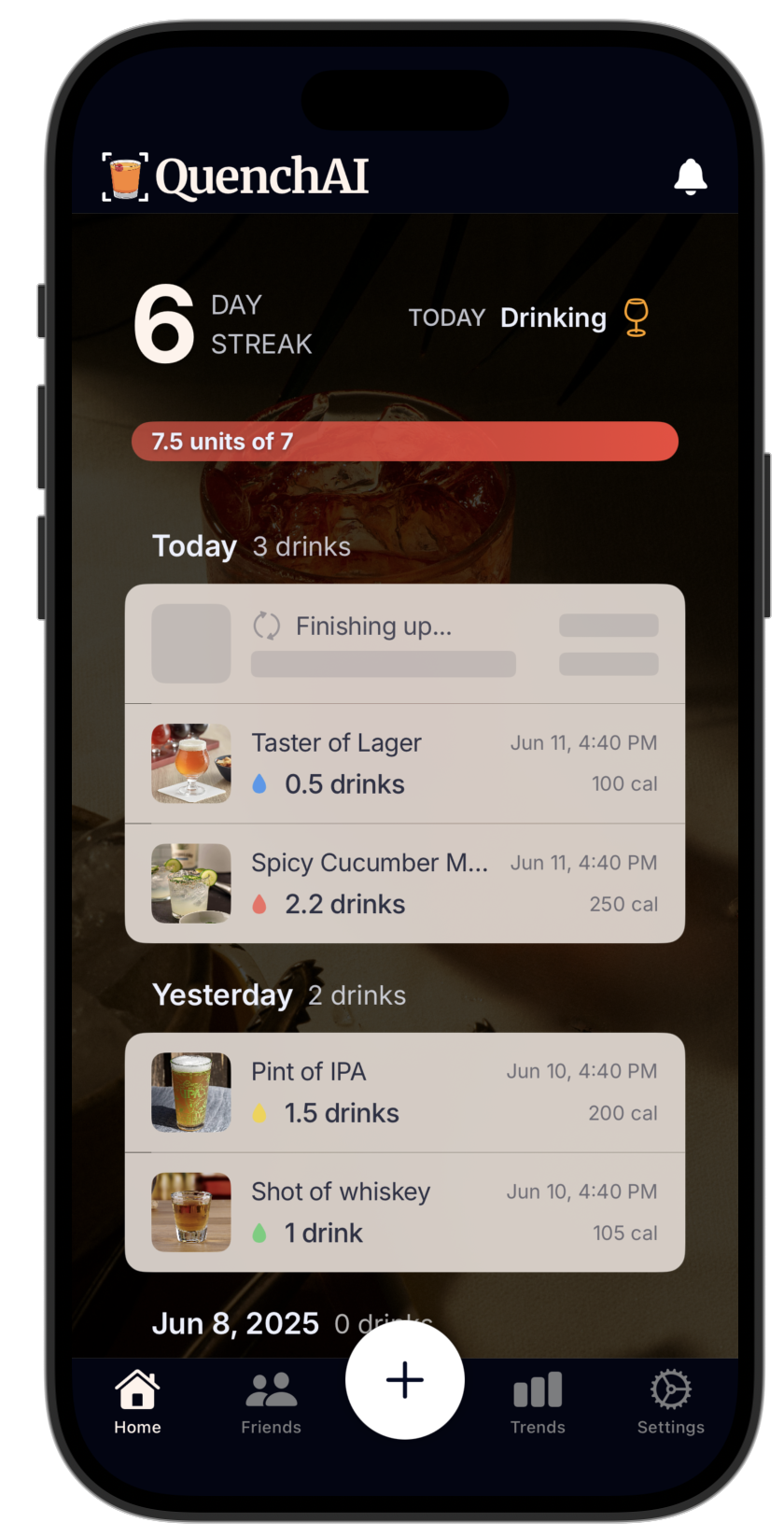

This winter, I built QuenchAI an iOS app that helps people precisely track their alcohol consumption using multimodal AI.

QuenchAI estimates standard alcohol units from a photo or natural language description of a beverage.

This post is a dive into the process of building QuenchAI, from the initial idea, through the technical implementation, to the final product.

- Motivation: Why I built QuenchAI

- Initial experiment: is this even feasible?

- Multimodal AI: options and tradeoffs

- Defining the outputs

- Prompt Engineering / Context Engineering

- MVP Client

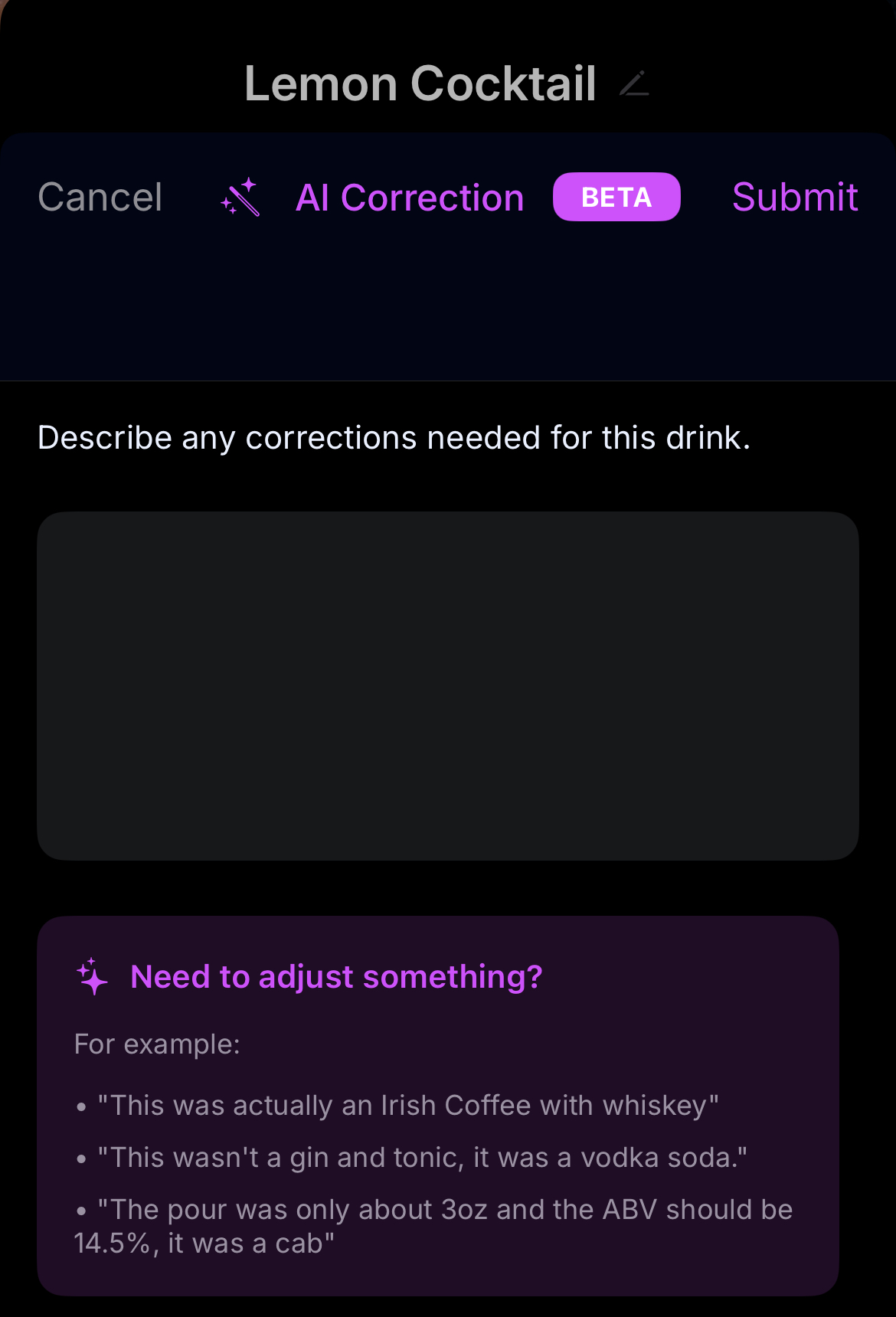

- Natural language revision: handling incorrect responses

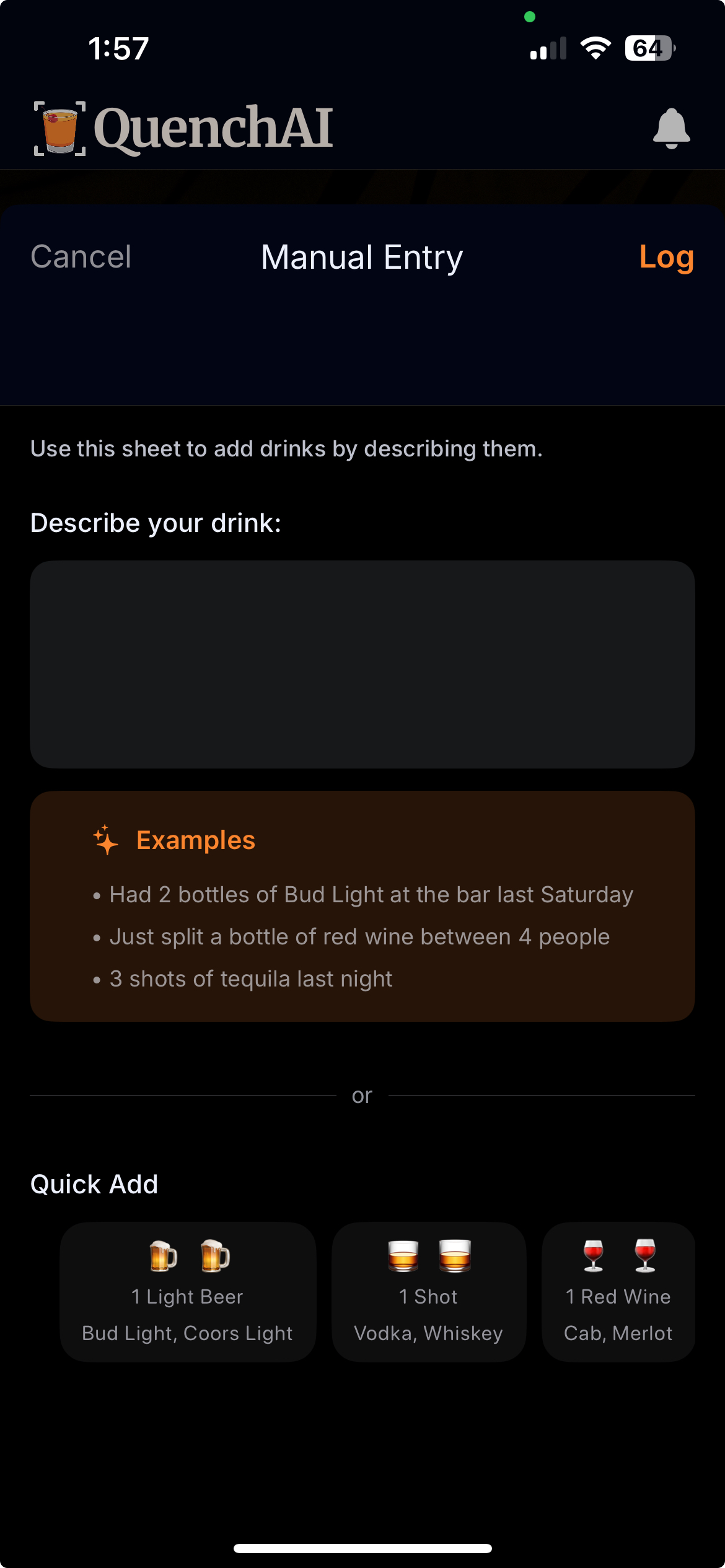

- Manual entry and error handling

- Retrospective thoughts: tradeoffs

- Evaluation and metrics

- Finishing the app

- Conclusion

Motivation: Why I built QuenchAI

Like many, I can enjoy a glass of wine alongside dinner or a well-made cocktail with friends, but I’m also aware of the research: even moderate alcohol consumption can harm health.

After hearing Dr. Andrew Huberman’s comprehensive podcast on alcohol, I wanted to get a better understanding on the true “alcohol units” in various drinks.

I knew a light beer had less alcohol than a double IPA, but I still treated a “drink” as a single unit: a beer, a glass of wine, or a standard cocktail. This was a mistake, as the variation in alcohol content even in common drinks is massive.

A “standard drink unit” is defined as 14g of pure alcohol, and by this metric a single drink served at a restaurant or bar can be anywhere between 0.5 to 3 “standard drinks”. That’s a pretty wide margin of error, especially when targeting specific research-based thresholds.

Given this definition, tracking standard drink units is actually nontrivial (especially on-the-go), so I wondered if there was an app that could do it for you. Just snap a photo or describe your drink briefly, and get a real, evidence-based estimate?

To my surprise: nothing like that existed. There were plenty of image-based calorie or macronutrient estimators, but no simple tool for tracking alcohol consumption.

Initial experiment: is this even feasible?

My first approach was simple: just send a photo to the most powerful multimodal LLMs (e.g. GPT-4o, Gemini 2.5 Pro, Claude 3) and ask “How many standard alcohol units are in this drink?”

The results were surprisingly decent with this naive approach. I was not surprised with the accuracy of canned or bottled beverages, but there were even some cases where the model was able to properly identify a cocktail from a photo. However, the results fell apart as I moved away from the most obvious cases and tested ambiguous glassware, photos with multiple drinks in-frame, or less common cocktails. The models’ general knowledge was impressive, but not enough for consistent, reliable outputs.

Based on my experience building products, I knew that the value from this technology would come from these more complex situations, and I couldn’t rely on a user’s photo to be perfect every time, so I started to think about how to build a system that could handle the nuances of real-world photos.

I primarily used Google’s AI Studio UI for iteration at this stage, since it provided the most convenient UI for iterating on a prompt or specific parameters and immediately re-run the inference.

Multimodal AI: options and tradeoffs

In my last project, the Mandarin Tone Trainer, I first looked for existing solutions, and when LLMs alone were not able to solve the problem, I explored alternative model architectures, and ultimately prepared a dataset to finetune my own model.

In trying to solve this drink tracking scenario, I first clarified the core sub-problems: recognizing the drink, inferring the volume, estimating the alcohol content, and ultimately calculating the standard units.

There’s a spectrum of approaches to building a system for this:

- Prompt engineering with foundation models: Simplest approach using GPT-4o, Claude 3, or Gemini 2.5 Pro with carefully crafted prompts. Fast to implement but potentially brittle on edge cases.

- Traditional computer vision pipeline: Object detection + classification models for drink/glassware recognition, combined with volume estimation algorithms. Would require training data but offers more control and lower inference costs.

- Fine-tune a multimodal LLM: this would be the most flexible and could provide the cheapest inference, but would require significant up-front costs and effort to prepare a training dataset.

- Build a Retrieval-Augmented Generation (RAG) pipeline: this could help by injecting factual knowledge about drinks and glassware, but would introduce infrastructure complexity, latency, and a new error surface.

In the spirit of pragmatism, I wanted to keep things simple and only add complexity when the simpler approach fails. After experimenting with Gemini 2.5 Pro, Claude 3, and GPT-4o, I found that I didn’t need fine-tuning, RAG, or any custom model architectures to solve this problem. I was able to get reliable results with a general-purpose multimodal LLM, and I was able to get even better results with prompting techniques.

This approach aligns with some recent trends in the “foundation model era” of AI-enabled product engineering. Large multimodal LLMs by their very nature have knowledge of many concepts, including drink types, glassware, typical alcohol percentages, and serving sizes as a result of their massive training data. With enough coaching and steering, the models should be able to handle the task readily.

With this approach, I would focus my efforts on prompt engineering (aka “context engineering”) rather than gathering and annotating a large dataset like the traditional computer vision approach for image classification.

I knew from experience that with the right context, I could leverage the pre-trained visual and textual representations within these large multimodal models. I needed to experiment with different approaches to systematically engineer the prompt to guide the model’s reasoning process.

Defining the outputs

The core task: convert a photo of a drink into standard drink units.

After trying (and failing) to coax the models into consistently converting an image of a drink directly into standard drink units, I broke the problem up into subcomponents, settling on a structured output that captures the essential elements with which I could then calculate the standard units:

- Volume: The liquid volume in ml (not the glass capacity)

- ABV: estimated alcohol by volume percentage (% alcohol)

These would be easier for the model to estimate, and with volume and ABV I could calculate the volume of pure alcohol arithmetically as follows:

standard drink unit = (volume in mL) * (ABV) * (0.789 g/mL) / (14g)This structured approach could better handle edge cases, like exceedingly large pours of wine or varying sizes of beer glass.

Given I expected to handle arbitrary user-generated content, ambiguity would be inevitable. What if a photo doesn’t even contain a drink? What if the user sends a drink of a non-alcoholic drink like sparkling water? What if the photo is too blurry or the drink is too small to be visible?

To help handle these cases, I added a “type” classification, where a photo would be classified as either an alcoholic drink type (e.g. beer/wine/cocktail), a non-alcoholic drink, or “N/A” (non-drink). This way, the system could gracefully handle cases where the photo was not a drink, and had a safe “error case” for when the model was unable to classify the photo.

This was a good start, but the output qualities were still lacking. I needed to do more to “guide” the model into the correct reasoning process.

Prompt Engineering / Context Engineering

Even breaking the inference task into volume, ABV, and type, there were still plenty of cases where the estimates were quite off. I needed to do more to “guide” the model into the correct reasoning process. Instead of just mindlessly adding instructions to the prompt, I relied on proven techniques:

Chain-of-Thought Reasoning (CoT)

Chain-of-Thought (CoT) prompting is a technique that encourages the model to process information step-by-step before reaching a conclusion. This method was notably published in the 2022 paper Chain of Thought Prompting Elicits Reasoning in Large Language Models, where researchers found that CoT reasoning is an emergent property of sufficiently large models. When simply asked to “think step-by-step”, LLMs demonstrated improved performance on diverse reasoning tasks.

For this application, I broke up the task into two specific phases, first the model would focus on the image, “thinking” about the image and recording observations, and afterwards it would “answer” the question relying on the observations.

This simple structural change had significant, measurable impact. Across my test images, this consistently improved both volume and ABV estimates. Furthermore, it nearly eliminated catastrophic failures where the model hallucinated details or focused on irrelevant/incorrect aspects of the image.

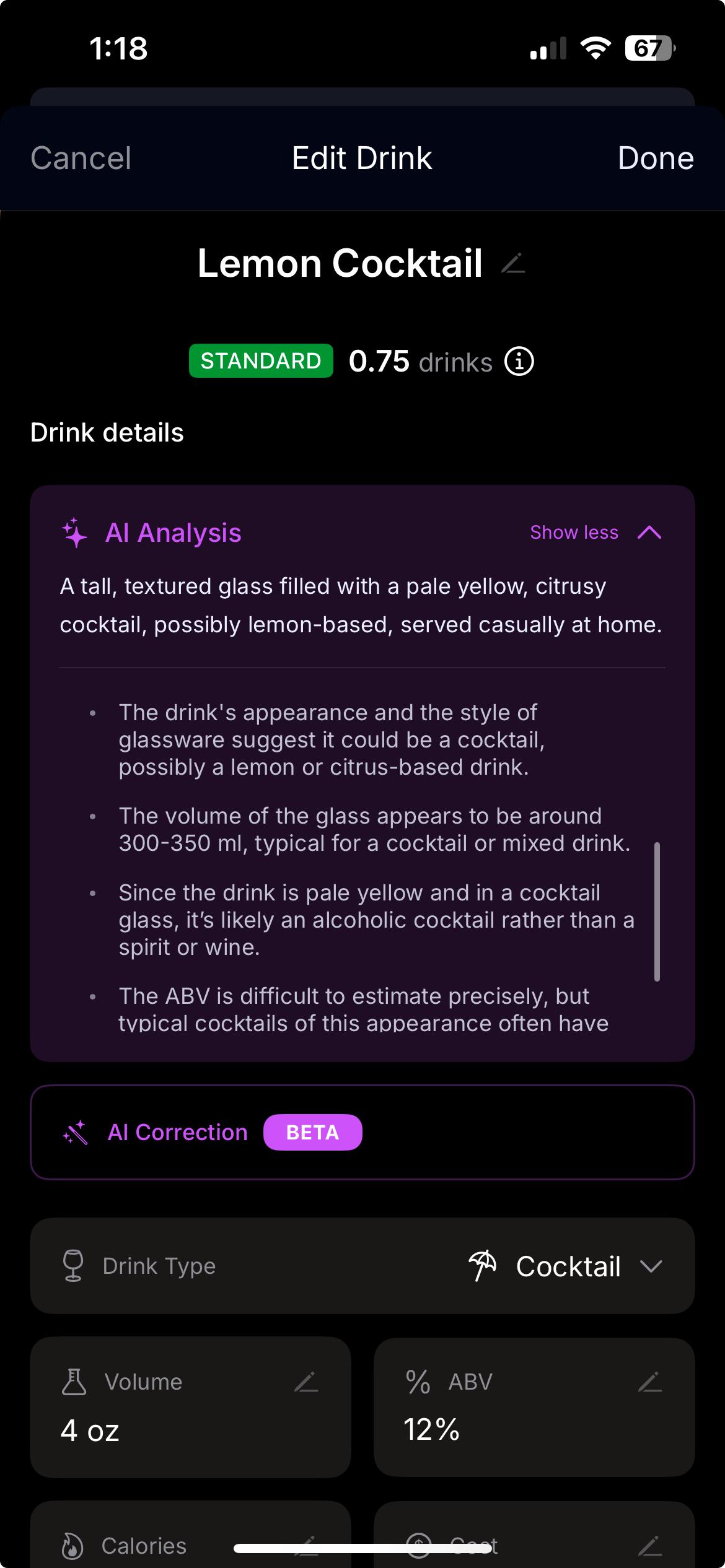

Here is an example of one particular test image and the impact on the quality of output:

Output without CoT:

Here's the breakdown of the drinks and the requested information:

**Identified Drinks:**

- Cucumber Margarita: The drink in the foreground, garnished with cucumber slices and a rim of salt.

- Cucumber Margarita: The drink in the background, garnished with cucumber slices and a rim of salt.

**User's Drink (Assuming the user is logging the drink in the foreground):**

- Volume: The glass appears to be a standard rocks glass, filled with ice and liquid. Estimating the liquid volume, it looks to be around 240 ml.

- ABV: A standard margarita contains tequila (typically 40% ABV), lime juice, and a sweetener. Assuming a standard margarita recipe with 1.5 oz (44 ml) of tequila in the 240 ml drink, the overall ABV would be around 7-8%.

Therefore:

- Drink: Cucumber Margarita

- Volume: 240 ml

- ABV: 7.5%This calculates to ~1.0 standard drink, a clear underestimate. The failure is twofold:

- Naive volume estimation: the model used the full capacity of the rocks glass, failing to account for significant volume displacement by the ice.

- Simplfied ABV calculation: the model failed to account for the additional ingredients in the margarita such as the orange liquer (e.g. Cointreau/Triple Sec) which contribute to the overall alcohol content.

Output with CoT:

Here's a breakdown of the drinks in the image and an estimation for the user's intended drink:

1. Identify the drink(s):

There are two drinks visible in the image. Both appear to be the same type of cocktail served in similar glasses. In the background, there's also a bottle which appears to be a spirit bottle, likely tequila given the type of cocktail.

2. Determine which drink the user intends to log:

The drink in the foreground, prominently featured and in sharp focus, is clearly the intended drink for logging.

3. For the user's drink (the one in the foreground):

**Observations and Step-by-Step Reasoning:**

- Glass Type: The drink is in a rocks glass (also known as an old-fashioned glass or tumbler). These glasses typically hold between 8-12 ounces (240-360 ml).

- Contents: The glass is filled with ice cubes, a clear to slightly cloudy pale yellow-green liquid, and garnished with cucumber slices and a salt rim. This suggests a cucumber margarita.

- Fill Level: The liquid level is quite high, reaching almost to the top of the ice, which nearly fills the glass.

- Ice Displacement: A significant portion of the glass's volume is occupied by ice.

**Volume (estimated):**

- A common rocks glass capacity is around 10 oz (approx. 300 ml).

- Given the amount of ice, the liquid volume in the glass is likely about half to two-thirds of the total glass volume.

- Let's estimate the total liquid volume, accounting for ice displacement. If the glass holds 300ml, and half to two-thirds is liquid, that would be 150-200ml.

- A standard margarita liquid volume (without ice) is often around 5-6 oz (150-180 ml). This drink appears to be a standard-sized cocktail.

- Therefore, an estimated liquid volume for this cocktail is 180 ml.

**ABV (estimated):**

- Margaritas are typically made with tequila, which is around 40% ABV (80 proof).

- A classic margarita recipe usually contains 1.5-2 oz of tequila.

- Let's assume 2 oz (60 ml) of 40% ABV tequila.

- The remaining volume (180 ml - 60 ml = 120 ml) would be lime juice, orange liqueur (e.g., Triple Sec), and potentially a sweetener or other mixers. These mixers are non-alcoholic or have lower ABV (like Cointreau or Triple Sec, which are around 20-40% ABV, but used in smaller quantities than the tequila).

- If we consider the tequila to be the primary alcoholic component:

- Alcohol volume = 60 ml * 0.40 = 24 ml

- Total liquid volume = 180 ml

- ABV = (Alcohol volume / Total liquid volume) * 100 = (24 ml / 180 ml) * 100 = 13.33%

- Standard margaritas typically range from 12% to 18% ABV, depending on the exact recipe and strength desired. Given the visible amount of liquid and common cocktail ratios, 13-15% is a reasonable estimate.

- Therefore, an estimated ABV is 14%.

**Final Answer:**

- Drinks in the image: Two cocktails (likely cucumber margaritas) and a bottle of tequila in the background.

- User's intended drink: The cocktail in the foreground.

- Volume (estimated): 180 ml

- ABV (estimated): 14%

This calculates to ~1.4 standard units, a far more plausible estimate. The CoT prompt forced the model to perform two critical reasoning steps:

- Ice displacement: explicitly acounted for ice displacement, reducing the volume estimate by 25%

- Ingredient List: identifying that there are ingredients in this drink other than the primary spirit of tequila

Critically, while the model identified the presence of Cointreau/Triple Sec, it still failed to correctly incorporate their alcohol content into the final ABV calculation. The reasoning trace made this failure mode obvious, and more importantly, debuggable.

Dual benefit of CoT interpretability

The most immediate benefit of the CoT trace was its power as a debugging tool. The margarita example proves the point: the trace showed the model knew about orange liqueur but failed to use that knowledge in its final calculation. This insight was crucial. It told me the problem wasn’t a lack of knowledge, but a failure in the reasoning process, which could be iterated on.

This transparency also had a surprising user-facing benefit. As I would later discover in user testing, showing the reasoning trace made the feature feel more trustworthy and less like a “black box,” a point I’ll return to later.

Note: It’s important to mention that while CoT reasoning improves interpretability, it doesn’t offer a complete picture of the internal workings of an LLM, and reasoning traces are not 100% reliable.

Few-Shot Prompting

The last experiment showed that the model’s visual capabilities were adequate for identifying many of the important visual features, and the internal knowledge strong enough to know basic cocktail recipes.

The problem was: the models could not be relied on to consistently integrate these into an air-tight reasoning chain.

I needed to demonstrate exactly how exactly they should be using their reasoning traces and observations to make good estimates. While the general training data likely included photos of drinks in common settings, it is unlikely that there was much focus on visually estimating standard alcohol units, and as a result, the results varied greatly.

To “teach” the model, I used in-context learning, specifically “multishot prompting,” prepending exemplar answers with reasoning and analysis on test drinks to demonstrate the intended process for completing the drink analysis task.

This technique is called “multishot prompting” or “few shot prompting”, and it is a technique that I had used extensively when building the document understanding pipeline for Socius Legal.

I was familiar with the “discovery” of in-context learning from the 2020 paper Language Models are Few-Shot Learners, (aka the GPT-3 paper), where researchers at OpenAI showed that large language models could learn new tasks “on-the-fly” from one or more examples provided directly in the prompt, without requiring any additional training or fine-tuning of model weights.

In the spirit of simplicity, I wanted to evaluate the impact of spending a few hundred tokens on the prompt, rather than a more expensive approach like fine-tuning or a slower approach like a multi-prompt flow for drink analysis.

Separately, I knew that when it eventually came to integrating these inference results into a product, I would need to start working towards more structure in the outputs, so I began enforcing specific delimiters, as you can see in the few-shot prompt snippet below:

Excerpt from prompt:

## Example:

Image Context:

- A round table with three drinks

- One is clearly closer to the user, two others further away (maybe corresponding to other seats)

Response:

<thinking>

- The image shows three drinks, but the most central and foreground drink is the user's.

- The user's drink is a rocks glass with a pale green liquid, garnished with red powder and a lime wedge.

- Based on the appearance, this could be a margarita-style cocktail, with tequila, cointreau, and lime juice. The red powder is likely a chili salt, suggesting a spicy margarita.

- Typical recipe: 2 oz tequila (40% ABV), 1 oz cointreau (40% ABV), 1 oz lime juice, with ice melt contributing ~1 oz.

- Estimated total volume: 5 oz.

- To calculate the ABV, we need to consider the volume of each ingredient and its ABV.

- The tequila contributes 2 oz * 40% = 0.8 oz of alcohol.

- The cointreau contributes 1 oz * 40% = 0.4 oz of alcohol.

- The lime juice contributes 1 oz * 0% = 0 oz of alcohol.

- The ice melt contributes 1 oz * 0% = 0 oz of alcohol.

- Total alcohol volume: 0.8 oz + 0.4 oz + 0 oz + 0 oz = 1.2 oz.

- Total volume: 5 oz.

- ABV: (1.2 oz / 5 oz) * 100% = 24%.

- Calories: 2 oz tequila (130 kcal/oz) + 1 oz cointreau (95 kcal/oz) + 1 oz lime juice (0 kcal/oz) + 1 oz ice melt (0 kcal/oz) = 225 kcal.

</thinking>

<drink>

{

"volume": "5 oz",

"abv": "24%",

"calories": 225

}

</drink>When provided examples like this, LLMs are good at generalizing and emulating the patterns, including the reasoning. This was a huge improvement over the previous iteration, and the consistency of outputs was now in a satisfying place.

That being said, but there were still cases where the outputs were inaccurate. This is when we were exceeding capabilities of the model’s general knowledge, for example non-standard glassware, specialty cocktails, or specific ABV values for certain drinks.

Including Domain knowledge

However, even iterating on the chain-of-thought and multi-shot prompts, there were still some quality issues.

Because of the diversity of drinks and the inherent vagueness of user photos, I needed to inject concrete domain knowledge directly into the model’s context. This included glassware sizes, standard drink recipes, and ABV values for various drinks and ingredients.

Instead of using a full RAG pipeline, which would add complexity, cost, and latency, I opted for a static “cheatsheet” prepended to the prompt. This decision was driven by several factors:

Example snippet from the “cheatsheet”:

Refer to the following standard glassware capacities, as needed:

- Beer

- Pint Glass (US): 475 ml

- Standard Beer Mug: 500 ml

- Large Beer Mug or Stein: 1000 ml

- Tulip Glass: 400 ml

- Tasting Glass: 100 ml

- Wine

- Red Wine Glass: 300 ml

...

## Cocktail Recipes

Margarita (Classic)

- Ingredients:

- 2 oz tequila (40% ABV)

- 1 oz Cointreau or Grand Marnier (40% ABV)

- 1 oz lime juice

- Optional: 0.5 oz simple syrup (if specified as sweeter)

- Volume: 120 ml (plus ice melt ~30 ml = 150 ml)

- ABV: (2 oz tequila * 40% + 1 oz Cointreau * 40%) / 4 oz = 20%

- Calories: 2 oz tequila (130 kcal) + 1 oz Cointreau (95 kcal) = 225 kcal

...The inclusion of this domain knowledge drastically improved quality of the model’s responses, especially for non-standard drinks, where the model was able to use the provided examples to make better, more consistent estimates.

Why not RAG? For this use-case, the knowledge base was small and static—only about 2000 tokens. A proper information retrieval system would have been overkill, better suited for scenarios with large and/or dynamic knowledge bases that exceed context window limits.

The simplicity trade-off: Injecting domain knowledge is essential for reliability. Some teams reach for RAG (retrieval-augmented generation) by default, but that’s often unnecessary complexity. My knowledge base comprising standard glassware sizes, common cocktail recipes, ABV lookups would fit comfortably within 2000 tokens and could reasonably be included directly in the prompt. This approach is economical, introduces no latency, and doesn’t come with any of the increased error surface or reliability risks of incorporating a semantic search stack for a static, tiny dataset.

The cost of this simple solution was that updates would require backend deployments rather than updating a separate datastore, but the benefits of avoiding additional infrastructure complexity far outweighed this minor inconvenience.

Many projects in applied AI spiral into unnecessary complexity, with practitioners adding RAG, vector databases, and elaborate retrieval systems when a thousand or so tokens of static context would solve the problem more elegantly.

MVP Client

At this point, I had a performant and high-quality prompt, and I was excited to get an end-to-end MVP ready for full integration testing and better iteration. I wanted to keep the app simple and focused: a native iOS app with a simple REST API backend. I was committed to not overengineering the solution, instead focusing on well-supported technologies and patterns that I was familiar with to keep the codebase simple and easy to iterate on.

iOS Client

This was my first iOS project since 2012, so I needed to familiarize myself with the Swift language and SwiftUI. The learning curve was manageable thanks to excellent documentation and the declarative nature of SwiftUI, which felt familiar coming from React development.

For local persistence, I used SwiftData for its seamless integration with SwiftUI, but kept most of the business logic in the backend to maintain a clean separation of concerns. The iOS app primarily handled:

- Camera integration and photo capture

- User experience for viewing and editing drink entries

- Local caching of drink entries for offline viewing

Backend

I built the backend as a Django REST API, leveraging my existing familiarity with Python and Django to move quickly. The core components were:

- LLM Integration: Routed through OpenRouter, allowing me to easily experiment with different inference providers and models without changing application code

- Data Persistence: SQLite database for storing user accounts, drink logs, and analysis results

- Authentication: Simple JWT-based authentication with refresh tokens using Django REST framework

- Image Processing: Basic image optimization and storage for the photos users submit

Once I had both the backend and iOS client working together, I could start testing the app end-to-end with real photos in various lighting conditions, different drink types, and edge cases that hadn’t emerged during my initial prompt engineering phase.

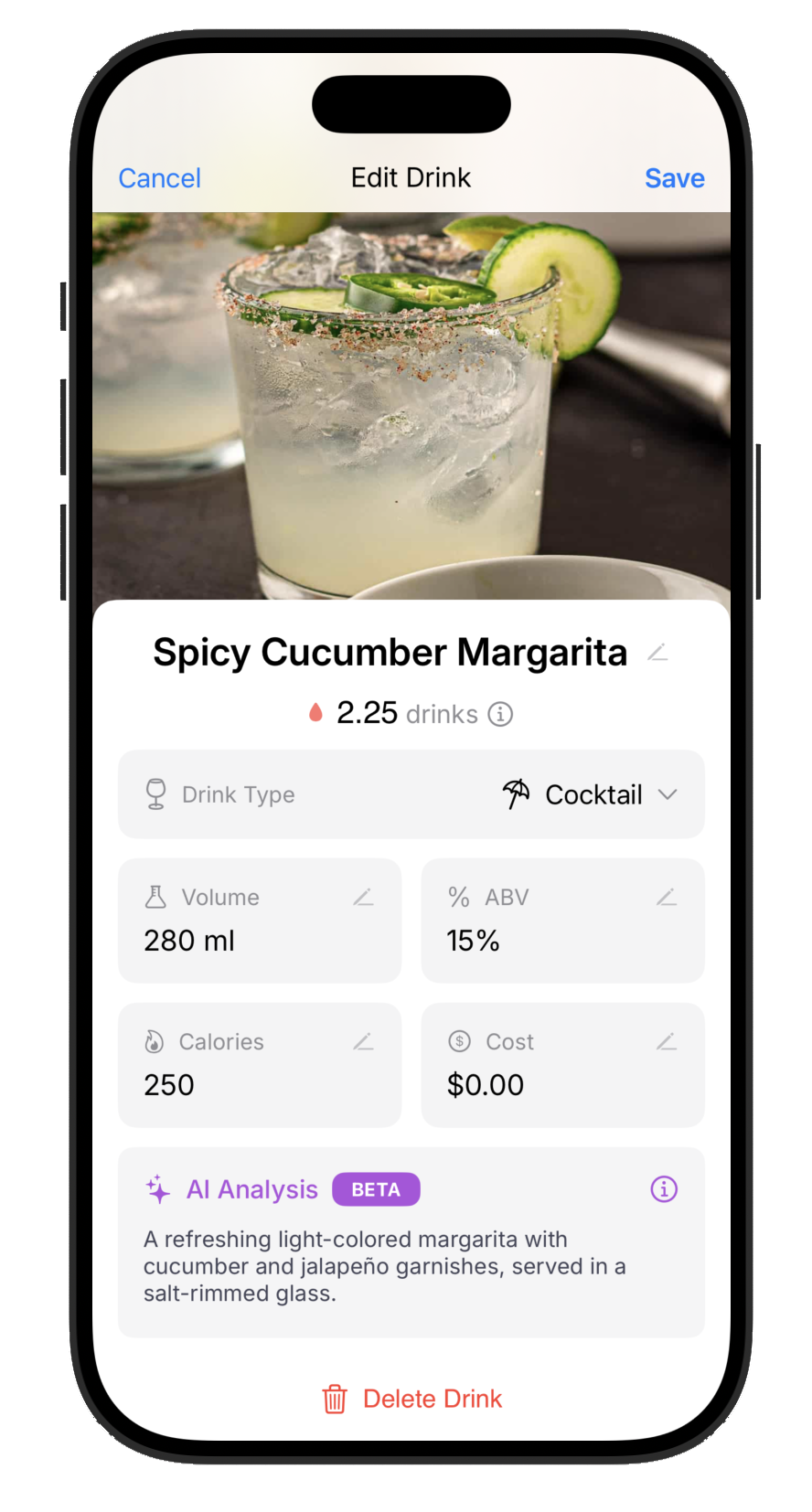

Natural language revision: handling incorrect responses

Overall, I made many fixes and refinements to the overall system, capturing edge cases and improving the model’s ability to handle a wide range of photos. The most important realization, however, was that no pure vision solution could ever be perfect for this scenario, so I needed to build a human-in-the-loop option to handle the cases where revisions were needed.

Even with the various prompt engineering and domain knowledge, I wasn’t able to solve for the inherently impossible or unlikely cases such as distinguishing the type of beer in a standard glass. Even a trained sommelier wouldn’t be able to consistently distinguish between different varieties of white wine without help of other senses like smell and taste.

The default user experience would have user’s reviewing and editing the incorrect fields in the form-style detail view, a tedious manual process. I wanted to smooth this process out, and considered how I would do it with a human: a natural language revision, like “actually, this was a riesling” or “this gin and tonic was actually a double”.

So I wrote a new LLM flow and stood up a new API route allowing for natural language corrections to be applied to previously logged drinks. The system would then adjust any incorrect fields and provide an updated reasoning trace based on the feedback.

This revision flow provided both an immediate usability win, while also providing a potential data flywheel. At the least, these could be reviewed for addition into our existing test cases, but in the medium-term, these manually annotated data samples (photo -> incorrect analysis -> feedback -> corrected analysis) could be used to fine-tune a smaller, cheaper model in the future.

Manual entry and error handling

While the photo analysis is the key feature of QuenchAI, not every drink is going to be photographed. Still, I wanted the application to support comprehensive tracking, so building off of the revision idea, I added a natural language manual entry flow, enabling users to add one or more drinks by describing in natural language.

For example “last night I had two Coors Lights at around 10” should be enough for the system to generate two drink entries with the correct standard units, and with the correct timezone-aware UTC timestamp.

In the interest of simplicity, the manual entry flow was built to be a simple text field with the backend handling conservative defaults to smooth out the user experience.

In case the drink was logged incorrectly, the user could simply use the existing revision flow to correct the fields.

Retrospective thoughts: tradeoffs

Building with cloud-based LLM providers introduces practical constraints that need to be carefully considered:

Cost Management Each inference call costs approximately 500 tokens out and 5-6k tokens in, even with the in-context learning examples. This were reasonable during development, but at scale these costs could become significant with the most expensive models. My constraint was to be able to run inference for less than $0.005 per image, which Gemini 2.5 Pro slightly exceeded.

I experimented with lower-tier models and found them to be roughly equivalent in quality but faster, which helped balance cost and performance. Originally the model used was Gemini 2.5 Pro, followed by Gemini 2.5 Flash, but after the release of GPT-4.1 nano, it became the clear winner for our tasks, where I am able to run ~500 inference calls for $0.01.

Also, in order to take advantage of prompt caching, I ensured that the image was added as the last element of the prompt, so the static parts of the prompt could be automatically cached by the inference provider, when supported.

Latency Response times varied significantly: 8-9 seconds for reasoning-heavy models, 1-2 seconds for faster alternatives. Each additional prompt in a multi-step flow multiplies this latency, which creates a poor user experience if not handled properly.

To address this, I implemented asynchronous processing where the app doesn’t hang waiting for the response. Instead, users return to the dashboard immediately, and drink entries show an “analyzing” state that updates dynamically when the response arrives. This pattern keeps the app feeling responsive even when the AI processing takes time.

Additionally, I kept the main image classification task as one large inference rather than a pipeline of multiple smaller API calls. The risk of this decision was quality, but I was able to get good results with the current model, and the cost savings were significant. In the future, this can be an area for improving quality at the expense of latency.

Privacy User photos and drink consumption data are sent to third-party providers (e.g. OpenAI, Google). While I determined this data wasn’t sensitive enough to warrant zero data retention policies, this is a meaningful consideration for users concerned about privacy.

The app’s privacy policy clearly states that user photos are sent to third-party AI service providers for analysis, and I’m careful not to send any personally identifiable information (names, emails, etc.) alongside the image data.

For privacy-sensitive inference, I would explore the existing zero-data retention offerings from major inference providers, and also could experiment with local models for some or all of the classification tasks.

Scalability Using cloud-based LLMs for every user action raises practical challenges around API costs, inference latency, and reliability. While prompt engineering and static knowledge bases keep inference cheap and fast for a small user base, the economics change dramatically at scale, especially if we need to use more expensive models.

Evaluation and metrics

Testing

I built a scrappy set of test images comprising different scenarios as part of measuring performance and quality of various models. The test set included:

- Easy cases: Clear photos of common drinks (beer bottles, wine glasses, standard cocktails)

- Ambiguous cases: Multiple drinks in frame, unusual glassware, poor lighting

- Edge cases: Partial pours, non-standard serving sizes, craft cocktails

- Failure cases: Non-alcoholic drinks, food items, empty tables, blurry photos

Key Metrics

I focused on these key metrics when evaluating the end-to-end system:

- Classification reliability the model should be able to handle the vast majority of photos, and the response should be parsable and usable. This means that the user should always be left in an actionable state, with no silent failures or unrecoverable issues.

- Classification accuracy the model should be able to correctly identify the drink type (e.g. beer, wine, cocktail, non-alcoholic, or N/A). If a drink is classified as the correct type, this ensures that users can always end up with an output that can be revised using the revision flow.

- Volume accuracy: how close the model estimated drink volume to the actual measured volume.

- ABV accuracy: how close the model estimated drink ABV to the actual measured ABV.

Areas for Improvement

With more time and resources, I would have liked to have:

- Larger test set: Expand to 200+ images with professional annotations

- Model comparison: Systematic testing across different LLM providers and model sizes

- On-device inference: Experiment with smaller models for offline and private usage

- Automated testing: Integrate evaluation into the CI/CD pipeline to catch regressions

Finishing the app

I shipped a beta using TestFlight to friends and family, which revealed several interesting insights from real user behavior and backend log analysis.

Users loved the reasoning traces. This was a surprising finding: many users were genuinely excited to read through the model’s step-by-step reasoning process. Based on this feedback, I gave the reasoning traces a more prominent position in the detail view UI. The transparency made users feel more confident in the accuracy of the results, and when the model was clearly wrong, users were less frustrated because they could see exactly where the reasoning went astray.

Photos were better than expected. People generally took careful photos, which is not what I had expected. I believe this is because users are generally interested in getting good outputs, as a result, most of the photos were taken with a clearly centered drink, and identifying features prominent and in-focus.

Conclusions

QuenchAI proved to be a fun, educational project. I learned a lot about the capabilities and limitations of today’s multimodal LLMs, as well as some practical experience on the end-to-end development of a modern mobile application.

- CoT for mech interp and user experience

- UX matters: human in the loop as a satisfying way to smooth over the edges of the issues with AI (dont make it a chore!)

- clever ways to use data to improve over time

As someone who has fallen into over-engineering and premature optimization traps, I was happy that I was able to ship a simple, working app that provides unique value for a niche complex task.

It is especially satisfying to see the backend metrics, observing users who are using the app daily including family, friends, and strangers who found the app through the App Store.

You can find QuenchAI for iOS on the App Store here